Insight Report | MindXO Research

As AI becomes embedded in core enterprise operations, organizations often treat AI governance, risk management, and compliance as interchangeable.

In practice, these functions are frequently bundled and managed through the same processes. The result is often a compliance-driven program with weak strategic steering, and with risk controls that do not reflect how AI systems actually operate.

The issue is not a lack of effort, but a failure to distinguish between fundamentally different functions.

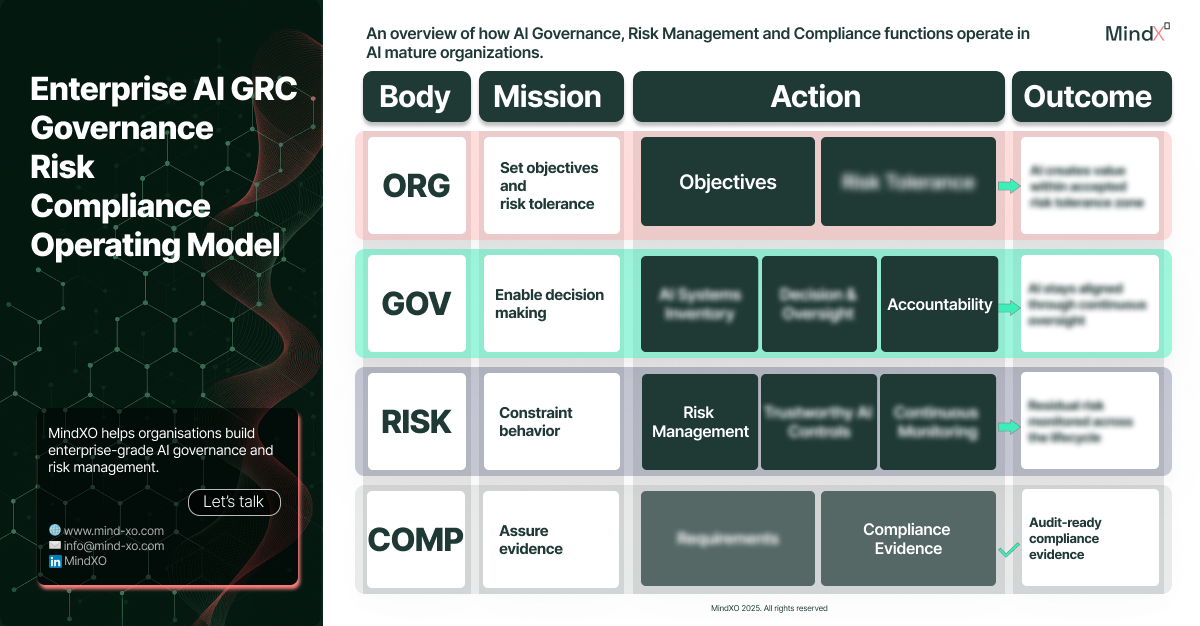

A one-page operating model clarifying the distinct roles of AI governance, risk management, and compliance across the AI lifecycle.

→ Download the Model: MindXO Enterprise AI Governance, Risk and Compliance (Operating Model)

A useful starting point is to state something that is often left implicit: organizations do not deploy AI in order to be ethical, trustworthy, or compliant. They deploy AI to achieve business objectives.

Those objectives may vary (efficiency, growth, resilience, better decisions), but they are always strategic in nature.

Trustworthiness, safety, fairness, and compliance do not define why AI exists in organizations. They define the conditions under which it is acceptable to operate.

Once this distinction is made, a great deal of confusion disappears. Ethical or trustworthy AI is not an end state to be achieved. It is a set of constraints that shape how AI systems should behave in pursuit of organizational goals.

Governance, risk management, and compliance exist to enforce those constraints in different ways, at different levels.

At the organizational level, AI-related decisions are deceptively simple. Leadership must decide what it wants AI to achieve and how much risk it is willing to accept in doing so.

These two decisions, objectives and risk tolerance, form the foundation of any serious AI program.

Crucially, risk tolerance is not a technical parameter. It is a strategic choice that reflects the organization’s appetite for operational disruption, reputational exposure, regulatory scrutiny, and societal impact. No model architecture or control framework can substitute for this decision.

If risk tolerance is left implicit or delegated entirely to technical teams, governance and compliance mechanisms will inevitably drift away from strategic intent. Everything that follows in the AI lifecycle exists to ensure that systems remain aligned with these two strategic choices.

AI governance is often described in terms of principles, ethics boards, or policy documents. While these may play a role, they do not constitute governance on their own. Governance is, at its core, a system for making and enforcing decisions.

In an AI context, governance ensures that the organization can continuously steer its AI systems toward business objectives while keeping risk exposure within accepted limits. This requires clarity on what AI systems exist, who has authority over them, who is accountable for their outcomes, and how issues are escalated when risk thresholds are approached or exceeded.

Governance therefore operates across the entire AI system lifecycle. It does not end at deployment, nor does it intervene only after incidents occur. Its purpose is to ensure that the right decisions can be made by the right people at the right time, whether that decision is to proceed, modify, pause, or retire an AI system.
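To make the escalation idea concrete, here is a minimal sketch in Python. It assumes, purely for illustration, that an AI system's residual risk and the organization's risk tolerance can each be expressed as a single score between 0 and 1, and that a fixed warning band just below tolerance counts as "approaching" the threshold. The names `AISystem`, `decide`, and `warning_margin`, and the specific decision rule, are hypothetical and are not part of the MindXO operating model.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class AISystem:
    """Hypothetical inventory entry: what the system is and who answers for it."""
    name: str
    accountable_owner: str   # who has authority over and is accountable for the system
    serves_objective: bool   # does it still contribute to a business objective?
    risk_exposure: float     # assumed: current residual risk score in [0, 1]


def decide(
    system: AISystem, risk_tolerance: float, warning_margin: float = 0.1
) -> Tuple[str, Optional[str]]:
    """Return (decision, escalate_to); escalate_to is None when no escalation is needed."""
    if not system.serves_objective:
        # Strategic misalignment: the system no longer serves a business objective.
        return "retire", system.accountable_owner
    if system.risk_exposure > risk_tolerance:
        # Tolerance exceeded: stop operating and escalate to the accountable owner.
        return "pause", system.accountable_owner
    if system.risk_exposure >= risk_tolerance - warning_margin:
        # Tolerance approached: adjust the system before it breaches the limit.
        return "modify", system.accountable_owner
    # Comfortably inside tolerance and aligned with objectives.
    return "proceed", None


chatbot = AISystem("support-chatbot", "Head of Customer Operations", True, 0.35)
print(decide(chatbot, risk_tolerance=0.4))  # ('modify', 'Head of Customer Operations')
```

Note that `risk_tolerance` is passed in rather than hard-coded: the limit is a leadership decision that the mechanism enforces, not a parameter the technical team chooses.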

Importantly, governance does not make AI systems safe or compliant by itself. It creates the conditions under which safety and compliance can be enforced.

If governance is about who decides, AI risk management is about what must not happen.

Risk management translates abstract risk tolerance into concrete controls applied to AI systems as they are designed, deployed, and operated. It identifies where AI behavior may lead to harm, treats those risks through technical and organizational controls, and monitors residual exposure as systems evolve and interact with their environment.

This is where the concept of trustworthy AI properly belongs. Trustworthiness is not a purpose or a promise. It is a collection of control objectives, such as reliability, robustness, explainability, and fairness, used to mitigate specific risks.

An AI system can be technically trustworthy and still be strategically misaligned. It can be compliant and still create unacceptable business or societal outcomes. Risk management exists to surface these mismatches and to ensure that residual risk remains within the boundaries set at the organizational level.

Because AI systems change over time, risk management cannot be static. Continuous monitoring is not a maturity enhancement; it is a necessity.

Compliance is often the most visible aspect of AI control, largely because it produces tangible artefacts: policies, reports, certifications, and audit trails.

AI compliance does not define objectives, determine risk tolerance, or manage risk. It identifies applicable internal and external requirements (for example, the organization's own policies, vendor contracts, and regulation) and provides evidence that those requirements are being met. Its function is assurance, not steering.

A compliance-first approach to AI can create a false sense of security. An organization may be able to demonstrate alignment with regulations and standards while still operating AI systems that are poorly governed or misaligned with strategic intent. Documentation is not control; it is proof that control mechanisms exist and are functioning as intended.
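The gap between assurance and control can be illustrated with a small Python sketch. It assumes, hypothetically, that a compliance check only verifies that evidence is on file for each requirement, while a separate risk check compares measured residual risk against tolerance. The requirement names, evidence files, and scores are invented for illustration.

```python
from typing import Dict, List


def compliance_gaps(requirements: List[str], evidence: Dict[str, str]) -> List[str]:
    """Assurance view: return requirements that have no recorded evidence."""
    return [req for req in requirements if not evidence.get(req)]


def within_tolerance(risk_exposure: float, risk_tolerance: float) -> bool:
    """Risk view: is residual exposure inside the limit leadership has set?"""
    return risk_exposure <= risk_tolerance


# Hypothetical example: every requirement has an artefact on file...
requirements = ["internal AI policy", "vendor contract clause", "regulatory documentation"]
evidence = {
    "internal AI policy": "policy-v2.pdf",
    "vendor contract clause": "contract-annex-B.pdf",
    "regulatory documentation": "tech-file.pdf",
}
print(compliance_gaps(requirements, evidence))  # []

# ...yet the system's measured residual risk is above tolerance.
print(within_tolerance(risk_exposure=0.6, risk_tolerance=0.4))  # False
```

Both checks are needed, and they answer different questions: the first shows that documentation exists, the second shows whether the system is actually operating within accepted limits.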

Understanding the distinction between AI governance, risk, and compliance is only the first step. The real challenge for organizations lies in translating this conceptual clarity into operating mechanisms that work in practice across business units, technologies, and the full AI system lifecycle.

This is where many AI initiatives stall. Organizations may have policies, principles, or compliance artefacts in place, yet still struggle to answer basic questions: which AI systems are active, who is accountable for them, how risk is monitored over time, and how strategic intent is enforced as systems evolve.

At MindXO, we work with organizations to bridge this gap. Our focus is not on adding another layer of frameworks or documentation, but on helping enterprises design and operationalize AI governance, risk, and compliance structures that are aligned with their business objectives and risk tolerance.

We support this journey through:

- a structured AI Maturity and Readiness Assessment,

- the design of AI Governance and Operating Models,

- AI Risk Management Frameworks embedded into the system lifecycle.

The objective is simple: enable organizations to scale AI with confidence, without sacrificing strategic control or resilience. If you would like to explore how this model applies to your organization, or assess where your current AI practices sit across governance, risk, and compliance, we invite you to start a conversation with us.

To help organizations navigate these concepts, we’ve created a one-page operating model that clarifies the distinct roles of AI governance, risk management, and compliance across the AI lifecycle.


→ Download the Model