> MindXO Insight | Analysis

AI risk is often framed as a technical challenge, centered on model accuracy, bias, hallucinations, or cybersecurity.

These issues matter, but they rarely explain why AI incidents escalate into major organizational failures.

The underlying reason is that AI risk does not remain confined to the system where it originates. It propagates through processes, decisions, and organizational structures, ultimately materializing as strategic, financial, or reputational exposure.

This dynamic makes risk-based AI governance essential, with oversight calibrated to organizational impact rather than technology alone.

A one-page operating model clarifying the distinct roles of AI governance, risk management, and compliance across the AI lifecycle.

→ Download the Model

MindXO AI Risk Boundaries in Organizations

Over the past two years, “AI risk” has become a ubiquitous expression. It appears in board presentations, regulatory consultations, strategy decks, and media headlines.

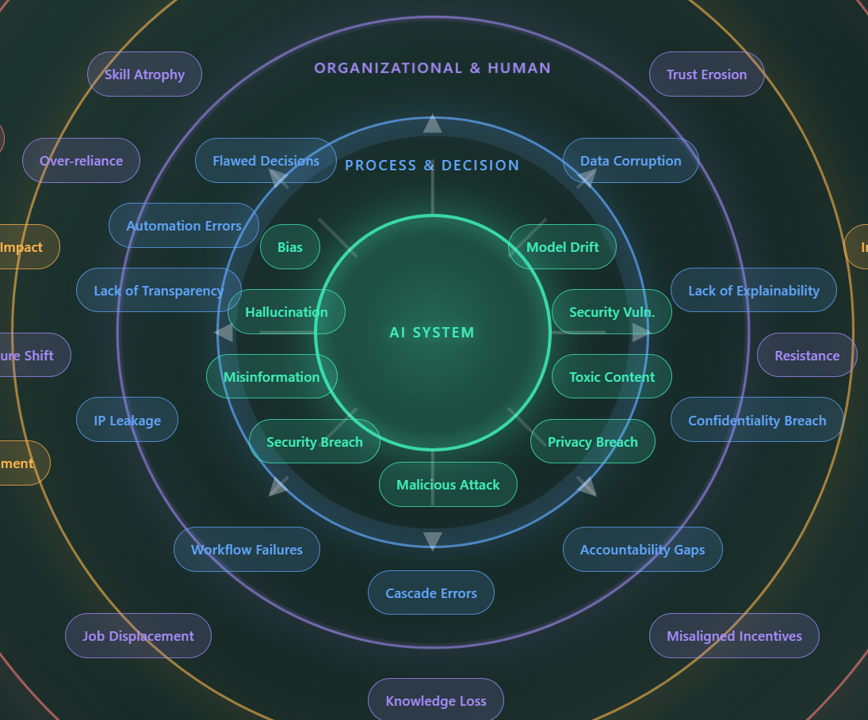

Core AI System Risks sit at the center. These are the technical vulnerabilities most commonly discussed: bias, hallucination, model drift, security breaches, privacy violations, misinformation, and toxic content. Responsibility for these risks typically sits with technical teams or vendors.

Process and Decision Risks emerge when AI outputs flow into business operations. Flawed automated decisions, cascade errors, IP leakage, confidentiality breaches, and accountability gaps appear at this layer. The risk is no longer contained within the system; it is shaping how work gets done.

Organizational and Human Risks arise as AI becomes embedded in the workforce. Over-reliance on AI outputs, skill atrophy, trust erosion, employee resistance, and knowledge loss affect organizational capability and culture. These risks are often invisible until they manifest as performance gaps or cultural dysfunction.

Strategic and Financial Risks represent the business-level consequences. Legal liability, compliance failures, revenue impact, investor concern, and competitive loss sit at this layer. By the time risk reaches here, it has typically crossed multiple organizational boundaries.

External and Societal Risks form the outermost layer. Reputation damage, customer harm, regulatory action, public backlash, and systemic risk extend beyond organizational boundaries. These are the terminal impacts where internal failures become external consequences.

This layered view of AI risk is consistent with and supported by academic research. The MIT AI Risk Repository, one of the most comprehensive efforts to catalogue AI-related risks, provides a valuable foundation for understanding the breadth of exposure organizations face.

The repository spans technical concerns such as model failures and security vulnerabilities, human–AI interaction risks including over-reliance and automation bias, and broader organizational and societal risks linked to governance gaps and misuse.

It reinforces a critical insight: AI risk cannot be reduced to model behavior alone. Risk emerges from the interaction between technology, people, and institutions.

The framework presented here builds on this foundation but extends it in a crucial direction: it illustrates how risks evolve once AI systems are operational, for example how a technical failure in one layer cascades into strategic or reputational consequences in another.

This is the gap that a propagation-based view fills: understanding not just what risks exist, but how they move, amplify, and compound across organizational boundaries.

Understanding the layers is only the first step. What makes AI risk particularly challenging is the way it moves between them.

Three dynamics drive this propagation:

Risk amplification. A single bias in an AI model can cascade through thousands of automated decisions before detection, then trigger regulatory scrutiny and reputation damage. The impact compounds as it moves outward, because each layer it reaches adds its own consequences to those already incurred.

Detection latency. Risks often manifest far from their origin. Model drift may first appear as customer complaints, employee workarounds, or revenue anomalies, not in technical metrics. By the time the root cause is identified, consequences have already accumulated.

System interconnection. AI systems rarely operate in isolation. They connect to legacy systems, human processes, and external partners. Risks propagate through these interfaces, often crossing organizational boundaries in ways that are difficult to trace.
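To make the first two dynamics concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not part of the MindXO framework: the `Incident` record, its field names, and the figures in the example are all hypothetical, and the estimate is deliberately linear (decisions per day, times the share affected, times the days before detection).

```python
from dataclasses import dataclass

# The five layers described in this article, ordered from the center outward.
LAYERS = [
    "Core AI System",
    "Process and Decision",
    "Organizational and Human",
    "Strategic and Financial",
    "External and Societal",
]


@dataclass(frozen=True)
class Incident:
    """Hypothetical record of one AI incident (all fields are illustrative)."""
    origin_layer: str            # layer where the fault started
    detected_layer: str          # layer where someone first noticed it
    decisions_per_day: int       # automated decisions the system makes daily
    flawed_share: float          # fraction of decisions affected by the fault
    detection_latency_days: int  # days between onset and detection


def layers_crossed(incident: Incident) -> int:
    """Number of layer boundaries between the origin and the point of detection."""
    return LAYERS.index(incident.detected_layer) - LAYERS.index(incident.origin_layer)


def decisions_affected(incident: Incident) -> int:
    """Flawed decisions accumulated before detection (simple linear estimate)."""
    return round(
        incident.decisions_per_day
        * incident.flawed_share
        * incident.detection_latency_days
    )


# Illustrative figures only: model drift first noticed through customer complaints.
drift = Incident(
    origin_layer="Core AI System",
    detected_layer="External and Societal",
    decisions_per_day=2000,
    flawed_share=0.05,
    detection_latency_days=30,
)
print(layers_crossed(drift), decisions_affected(drift))
```

Even with this simple estimate, detection latency acts as a direct multiplier on exposure: halving the time to detection halves the number of flawed decisions that need to be remediated.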

Given how AI risk propagates, governance approaches that treat all AI systems equally are fundamentally inadequate.

A chatbot answering general questions does not carry the same organizational exposure as an AI system influencing credit decisions, medical diagnoses, or regulatory filings.

Risk-based governance recognizes this reality. It calibrates oversight, controls, and accountability to the potential impact of each AI system. The reference point is not the technology itself, but the consequences it can generate across the five layers of organizational exposure.

This means:

Proportionate controls. High-risk AI applications warrant continuous monitoring, senior accountability, and robust escalation paths. Lower-risk applications may require only periodic review. Resources flow to where exposure is greatest.

End-to-end visibility. Governance must track not just AI system performance, but how AI outputs flow through processes, decisions, and interactions. Risk cannot be managed if it cannot be seen.

Clear accountability. As risk spreads across organizational boundaries, accountability must be defined at each layer. When AI-influenced decisions go wrong, it must be clear who owns the consequence.

Adaptive oversight. Risk is not static. As AI systems scale, integrate with more processes, and influence more decisions, their risk profile changes. Governance must evolve accordingly.
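The principles above can be sketched as a simple tiering rule. This is a hedged illustration, not a prescribed method: the `AISystem` fields, the two-tier split, and the threshold (a system is treated as high-risk if it influences consequential decisions or its outputs can reach the fourth layer or beyond) are assumptions made for the example. Only the control lists are taken from the text.

```python
from dataclasses import dataclass, replace
from enum import Enum


class Tier(Enum):
    LOWER = "lower"
    HIGH = "high"


@dataclass(frozen=True)
class AISystem:
    """Hypothetical inventory entry for one AI system."""
    name: str
    outermost_layer: int           # 1 (core system) to 5 (external and societal)
    consequential_decisions: bool  # e.g. credit, medical, or regulatory outcomes


# Controls named in the article for each end of the spectrum.
CONTROLS = {
    Tier.HIGH: ["continuous monitoring", "senior accountability", "escalation paths"],
    Tier.LOWER: ["periodic review"],
}

# Assumed threshold: layer 4 is "Strategic and Financial" in the five-layer view.
HIGH_RISK_LAYER = 4


def assign_tier(system: AISystem) -> Tier:
    """Proportionate controls: tier by potential impact, not by technology."""
    if system.consequential_decisions or system.outermost_layer >= HIGH_RISK_LAYER:
        return Tier.HIGH
    return Tier.LOWER


chatbot = AISystem("general Q&A chatbot", outermost_layer=2, consequential_decisions=False)
credit = AISystem("credit decision model", outermost_layer=4, consequential_decisions=True)

for system in (chatbot, credit):
    tier = assign_tier(system)
    print(f"{system.name}: {tier.value} -> {CONTROLS[tier]}")

# Adaptive oversight: re-run the assessment whenever a system's reach changes.
scaled_chatbot = replace(chatbot, outermost_layer=4)
print(assign_tier(scaled_chatbot).value)
```

The point of the last two lines is that a tier is not assigned once: the same chatbot moves to the high tier when its outputs start feeding decisions with strategic or financial consequences.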

Many organizations approach AI governance as a compliance exercise: documenting risks, establishing policies, and checking boxes. This creates an illusion of control while exposure continues to grow.

True governance is about oversight and accountability, not compliance. It requires understanding where AI is used, how much risk is being generated, and whether that risk aligns with the organization's tolerance. It requires structures that detect risk movement early, contain it effectively, and escalate it appropriately.

AI redistributes risk across organizations in ways that are not always visible. Governance provides the structure needed to keep that redistribution within acceptable boundaries. Its purpose is not to constrain innovation, but to ensure that risk remains visible, accountability remains clear, and exposure remains intentional.

AI risk is an organizational problem with technology at its origin. The question for boards and executive teams is not only whether their AI models are accurate, but whether their organizations can absorb and manage the consequences when those models deviate from intended objectives.

This requires governance that goes beyond the algorithm: governance that sees risk as it propagates, responds to it proportionately, and maintains control across all five layers of exposure.

Approached this way, risk-based AI governance becomes an enabler of confidence rather than an obstacle to progress. It allows organizations to adopt AI knowing that risks are understood, monitored, and deliberately managed from the algorithm to the boardroom.

To help organisations navigate these concepts, we’ve created a one-page operating model clarifying the distinct roles of AI governance, risk management, and compliance across the AI lifecycle.

A one-page guide to help organisations distinguish principles, practices, and governance in AI initiatives.

→ Download the Model