Insight Report | MindXO Research

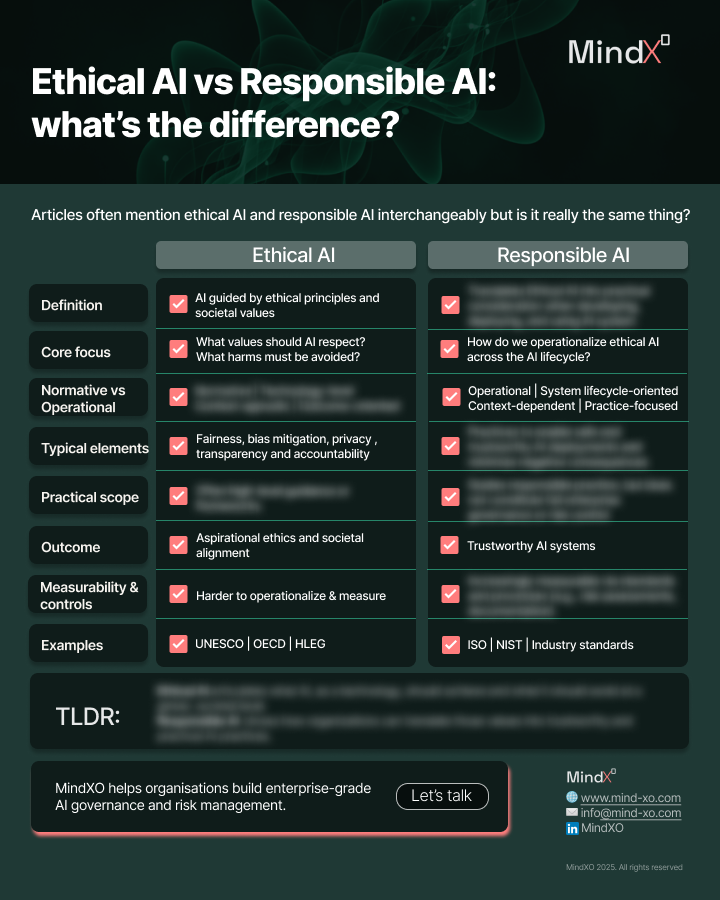

As artificial intelligence becomes embedded into core business operations, terms like Ethical AI and Responsible AI are often used interchangeably.

But they are not the same thing. Understanding the distinction is more than a semantic exercise. It shapes how organisations design, deploy, and govern AI systems in practice.

A one-page guide to help organisations distinguish principles, practices, and governance in AI initiatives.

→ Download the cheatsheet: MindXO Ethical AI vs Responsible AI

Ethical AI focuses on the societal and moral expectations placed on AI as a technology.

It asks fundamental questions such as:

What values should AI respect?

What harms must be avoided?

What rights must be protected?

What outcomes are unacceptable regardless of context?

Ethical AI is therefore:

Normative: rooted in values and principles

Technology-level: applying broadly across sectors and use cases

Context-agnostic: stable across industries and implementations

Outcome-oriented: focused on societal impact

This framing is reflected in global initiatives such as UNESCO’s Recommendation on the Ethics of Artificial Intelligence, the OECD AI Principles, and the Ethics Guidelines for Trustworthy AI published by the EU’s High-Level Expert Group on AI.

Ethical AI sets direction. It defines what AI technology should achieve and what it should never do.

Rather than redefining values, Responsible AI focuses on how organisations translate ethical principles into practical considerations when developing, deploying, and using AI systems.

It addresses questions like:

How do we operationalise fairness in model development?

What does accountability look like in deployment and use?

How do we minimise harm across the AI lifecycle?

How can AI systems be made trustworthy in real-world contexts?

Responsible AI is therefore:

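1. Operational: turning principles into concrete practices

2. System- and lifecycle-oriented: applied to specific AI systems from development through deployment and use

3. Context-dependent: shaped by the sector, use case, and deployment setting

4. Practice-focused: concerned with how AI is built, deployed, and used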

This perspective is reflected in standards and frameworks such as ISO/IEC 42001:2023 and the NIST AI Risk Management Framework, which emphasise trustworthy, safe, transparent, and reliable AI systems. Responsible AI shows how ethical expectations can be implemented in practice, but it does not, on its own, constitute full enterprise governance or risk management.

Many organisations believe they are “doing Responsible AI” when they are still operating at the level of principles and guidelines.

As AI scales across business units, vendors, and use cases, this gap becomes visible:

1. Ethics define intent, not control.

2. Responsible practices guide behaviour, but often lack decision authority.

3. Risk accumulates across systems, not just individual models.

As AI becomes business-critical, organisations must move beyond principles alone. The next challenge is enterprise-grade AI governance and risk management with clear ownership, decision rights, and controls aligned with business impact.

TL;DR:

1. Ethical AI defines what AI should achieve or avoid at a global, societal level

2. Responsible AI translates those ethical expectations into lifecycle practices

3. Enterprise AI governance determines who decides, when, and under what risk

Understanding where your organisation stands across these layers is the first step toward sustainable AI adoption.

To help organisations navigate these concepts, we’ve created a one-page comparison cheatsheet summarising the key differences between Ethical AI and Responsible AI, with references to global standards and frameworks.

→ Download the cheatsheet