Insight Report | MindXO Research

As artificial intelligence becomes embedded in core operations, organizations often rely on existing GRC frameworks for oversight.

In practice, AI strains the assumptions behind these frameworks and exposes gaps between formal compliance and effective control.

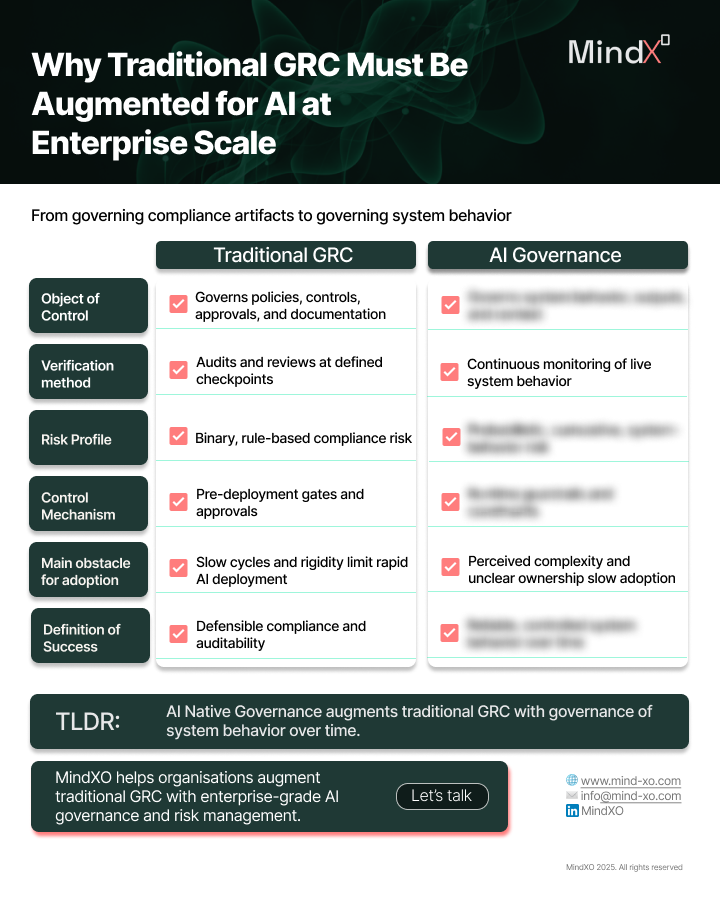

A one-page guide to augmenting GRC for enterprise AI.

MindXO Traditional GRC vs AI Governance

Governance, Risk, and Compliance (GRC) frameworks underpin how organizations establish control, accountability, and trust.

By translating regulation into policies, controls, and assurance mechanisms, they have proven resilient across industries and technologies.

Artificial intelligence does not invalidate this foundation. AI systems still require accountability, documentation, formal approvals, and regulatory alignment.

Yet as AI becomes embedded in core business operations, a growing tension emerges: governance remains formally sound, but effective control increasingly feels fragile.

This tension does not signal a failure of GRC. It signals the emergence of governance demands beyond the layers GRC was designed to address.

AI reshapes enterprise risk along two layers: the behavior of individual systems, and the way AI capabilities are adopted and used across the organization.

At one layer, risk is system-specific, arising from how models are designed, deployed, and operated.

At another, risk is organization-wide, stemming from governance choices, access to tools, and external exposure.

These layers mean AI risk is no longer confined to discrete projects or failures, but spans system behavior and organizational exposure across functions.

This layered risk profile creates a structural challenge for traditional GRC.

GRC is effective at governing organization-wide risk through policies, standards, and baseline controls, and at establishing accountability and regulatory alignment. However, it is not designed to jointly govern system-level and enterprise-level AI risks.

When these layers are treated as one, governance becomes either too abstract to manage system behavior or too rigid to scale.

As a result, organizations can remain compliant while still accumulating unmanaged exposure.

Governing AI in organizations builds on existing GRC foundations and extends them where needed.

Artifact-level governance must be complemented by mechanisms that address behavior over time. This extends governance beyond static controls toward continuous observation and early intervention, and reframes risk not only as an event but also as a trajectory.

When GRC is augmented with behavior-level governance, organizations gain a more complete form of control. Compliance and accountability remain intact, while emerging risks are detected earlier and addressed more precisely.

Governance shifts from retrospective assurance to an active capability that evolves alongside AI systems.

Traditional GRC remains a necessary foundation for governing AI, but on its own it no longer provides sufficient visibility into how AI systems behave in dynamic environments.

Organizations that augment GRC with AI-native governance capabilities are better positioned to maintain control, build trust, and scale AI responsibly.

The challenge is not whether AI should be governed, but whether governance reaches the layers where AI risk actually forms.

To help organizations navigate these concepts, we’ve created a one-page guide summarizing how traditional GRC must be augmented to address new AI risks.